TL;DR

The last few months have seen a heated debate in AI circles about the Scaling Hypothesis, which argues that large enough models may be capable of achieving artificial general intelligence. We survey some of the recent evidence for and against this hypothesis and argue that while there is compelling evidence for the effects of scale, the field of AI is close to hitting fundamental hardware limits that will prevent a superintelligence explosion.

How Intelligent are Large Models?

Over the last few months, a raging debate has broken out in AI circles over whether large models are intelligent. The same period has seen a wave of ever more powerful image generation models that can produce rich, sophisticated images on demand.

At the same time, these models have many known failures. Google’s own blog post highlights several classes of failure, such as “color bleeding,” “incorrect spatial relations,” and “improper handling of negation,” among others.

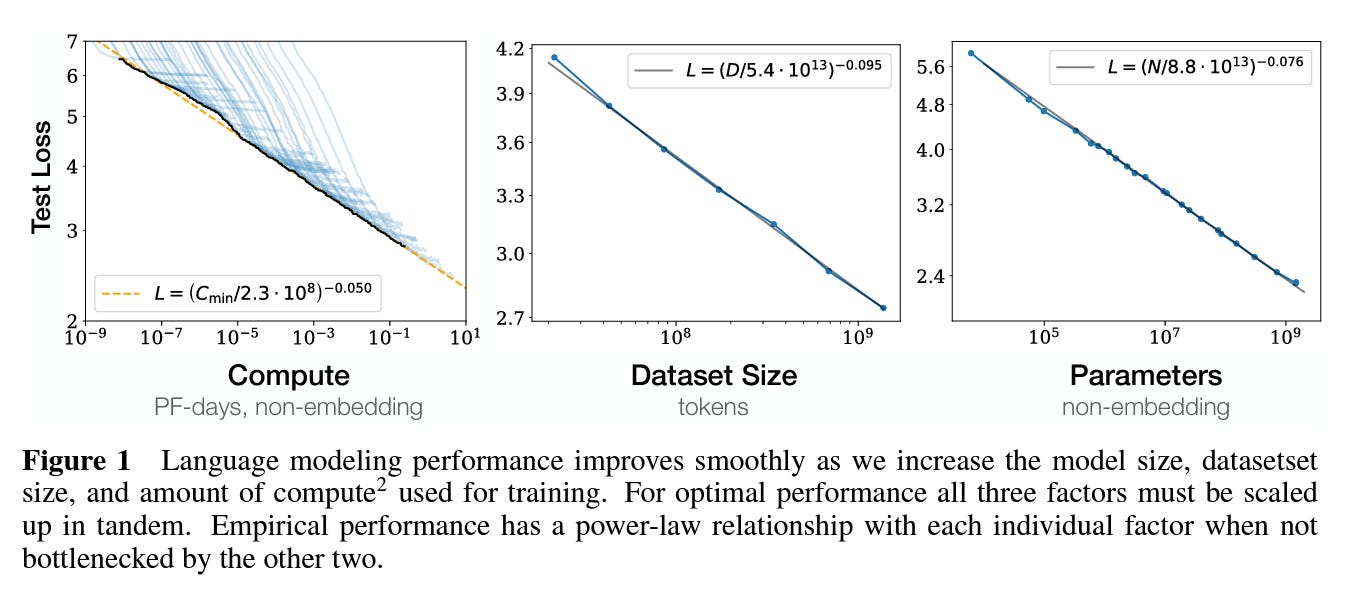

Gary Marcus has provided a compelling analysis of these systematic limitations on his Substack (see his “Horse Rides Astronaut” post, or a more recent essay about relevant ideas from linguistics). Marcus argues that these failures point to a fundamental flaw in current paradigms and that we need to go back to the drawing board to make progress. A blog post by Yann LeCun and Jacob Browning agrees that the limitations exist, but argues that they are “hurdles,” not “walls.”

Multiple lines of evidence suggest that various “neural scaling laws” exist and provide a clear pathway to improvements, at least in the short term. Early evidence also suggests that large models exhibit “emergence”: some capabilities are absent at smaller scales but appear rapidly once models are large enough.

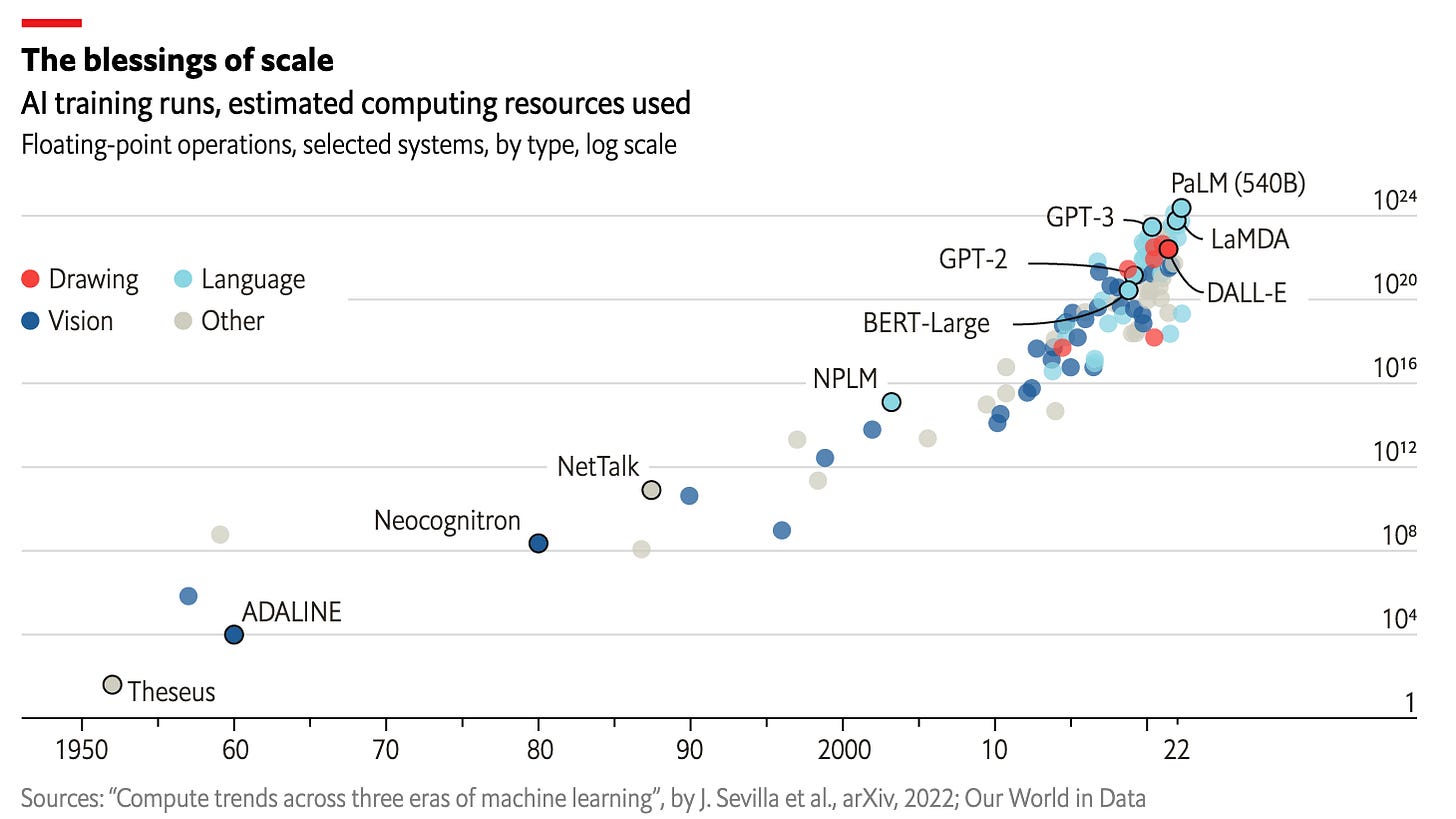
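To make the idea of a neural scaling law concrete, here is a minimal Python sketch assuming the commonly used power-law form L(N) = (N_c / N)^α, in which loss L falls as the parameter count N grows. The constants N_C and ALPHA are hypothetical values chosen only for illustration, not fitted to any real model. The sketch also hints at why hardware matters: on a power law, each fixed improvement in loss demands a multiplicative increase in scale.

```python
# Minimal sketch of a neural scaling law of the form L(N) = (N_c / N) ** alpha.
# N_C and ALPHA are hypothetical constants chosen for illustration only;
# they are not fitted to any real model.
N_C = 1e13    # assumed critical parameter count
ALPHA = 0.08  # assumed scaling exponent


def power_law_loss(n_params: float, n_c: float = N_C, alpha: float = ALPHA) -> float:
    """Predicted loss for a model with n_params parameters."""
    return (n_c / n_params) ** alpha


def params_for_loss(target_loss: float, n_c: float = N_C, alpha: float = ALPHA) -> float:
    """Invert the power law: the parameter count needed to reach target_loss."""
    return n_c / target_loss ** (1.0 / alpha)


if __name__ == "__main__":
    # Loss falls smoothly, but slowly, as the model grows by factors of 10.
    for n in (1e8, 1e9, 1e10, 1e11, 1e12):
        print(f"N = {n:.0e}: predicted loss = {power_law_loss(n):.3f}")

    # Every 10x scale-up multiplies the loss by the same constant factor.
    print(f"loss ratio per 10x scale-up: {10 ** -ALPHA:.3f}")

    # Halving the loss requires multiplying N by 2 ** (1 / alpha).
    print(f"scale-up needed to halve loss: {2 ** (1 / ALPHA):,.0f}x")
```

With the assumed exponent of 0.08, halving the loss requires scaling the parameter count by 2^(1/0.08), roughly 5,800x. Multipliers of that size are exactly what turns scaling into a hardware question.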

Does the scaling hypothesis hold true? Will large enough language models exhibit sentience? These are the possibly trillion-dollar questions facing the AI industry today. As an AI researcher, I believe in a weak version of the scaling hypothesis: large models exhibit interesting properties that justify further scientific exploration. These large models also seem to lead naturally to commercializable products, such as the recently launched GitHub Copilot, which charges $10/month to give programmers an autocomplete capability powered by large language models. At the same time, today’s large models are getting so large that they are approaching the limits of humanity’s computing capacity. The plot below from the Economist shows how staggeringly fast the amount of compute used has grown.

As a result, while it may have been fair to argue a few years ago that there was plenty of room to scale, I believe we are only a few years from the point where the largest models will have maxed out the largest available supercomputers. At that point, progress in AI will become a hardware problem rather than a software problem, which will naturally slow the rate of progress.

Six years ago, I wrote an essay, “The Ferocious Complexity of the Cell,” which argued that about 30 orders of magnitude of scaling would be needed to understand the brain. Progress in intelligent systems has been much faster than I expected then, but I believe we are still many orders of magnitude away from matching the human brain. The hard problems of physical foundry optimization remain a formidable barrier between today’s AI and potential superintelligence, and the slowdown of Moore’s law acts as a strong counterweight to emergent scaling curves. Some researchers suggest that scaling AI systems further will require a fundamental reworking of computer architecture paradigms.

We have covered the challenges of semiconductor scaling extensively in this newsletter. See, for example:

While improvements in AI chip design will lead to speedups, the fundamental physical challenges of shrinking transistors will likely tie AI progress to progress in semiconductor physics.

For this reason, I don’t personally find work on AI alignment compelling, especially compared with more immediate problems such as the large-scale misuse of AI for mass surveillance by authoritarian regimes.

Malignant AI could pose a challenge one day, but rising fascism, climate change, and military threats from authoritarian powers such as Russia and China loom as far more serious short-term problems.

Interesting Links from Around the Web

https://www.extremetech.com/gaming/337390-first-intel-arc-a380-desktop-benchmarks-are-disappointing: Intel’s first desktop GPUs post disappointing benchmark results.

Feedback and Comments

Please feel free to email me directly (bharath@deepforestsci.com) with your feedback and comments!

About

Deep Into the Forest is a newsletter by Deep Forest Sciences, Inc. We’re a deep tech R&D company building Chiron, an AI-powered scientific discovery engine. Deep Forest Sciences leads the development of the open source DeepChem ecosystem. Partner with us to apply our foundational AI technologies to hard real-world problems. Get in touch with us at partnerships@deepforestsci.com!

Credits

Author: Bharath Ramsundar, Ph.D.

Editor: Sandya Subramanian, Ph.D.